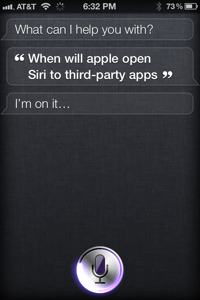

In a previous post we looked at some of the capabilities of Siri, Apple’s intelligent personal assistant. Now let’s venture a little deeper into how integration with third-party services might work.

I have to point something out that should be obvious: I have no inside knowledge of Apple’s product plans. This note is purely based on the publicly demonstrated features of Siri, sprinkled with a dose of speculation.

Although I had hoped Apple would offer deeper Siri integration with apps in iOS 6, what they ended up showing during the WWDC 2012 keynote was far more incremental: new back-end data services (sports scores, movie reviews, and local search) and the ability to launch apps by name.

(Image via Apple)


In the previous section we ended by noting which services Siri currently integrates with and how. But how might third parties, at some point, integrate with Siri as first-class participants?

Before we step into the deep end, we should distinguish between the three categories of services that Siri currently supports:

- On device, with a public API (e.g. calendar, alarms, location-based services).

- On device, without a public API (notes, reminders).

- Those that require access to server-side features (Yelp, WolframAlpha, sports scores).

First…

The Server Side

For any service to tie in with Siri, there are two ways to do the integration tango:

- Comply with an interface that Siri expects (for example, a set of RESTful actions and returned JSON data).

- Offer a proxy service or glue handlers to bridge the gap between what Siri expects and what the service/app offers.

This means Apple would have to publish a set of web APIs and data protocols and validate that third parties have implemented them correctly. What’s more, third parties may have to provide a guarantee of service, since to the end user any service failure would likely be blamed on Siri (at least until it starts quoting Bart Simpson).
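The second option, a proxy or glue handler, can be sketched in a few lines of Python. This is a minimal sketch built on invented assumptions. Apple has published no such interface, so the JSON request shape, every field name, and the `siri_proxy` and `legacy_forecast` functions are all hypothetical. The sketch only shows that the glue layer owns both translations (request in, response out), so the existing service does not have to change.

```python
import json


def legacy_forecast(city_name, iso_date):
    """Stand-in for an existing service that has its own, non-Siri API."""
    return {"loc": city_name, "date": iso_date, "hi_f": 78, "lo_f": 58}


def siri_proxy(raw_request):
    """Glue handler: translate a Siri-style request into a legacy call, then
    reshape the legacy result into the (hypothetical) Siri response format."""
    request = json.loads(raw_request)
    if request.get("action") != "get_forecast":
        return json.dumps({"status": "unsupported", "action": request.get("action")})
    params = request["params"]
    legacy = legacy_forecast(params["city"], params["day"])
    return json.dumps({
        "status": "ok",
        "domain": request["domain"],
        "result": {
            "location": legacy["loc"],
            "date": legacy["date"],
            "high": legacy["hi_f"],
            "low": legacy["lo_f"],
            "units": "F",
        },
    })


# Invented request shape; none of these field names come from Apple.
SIRI_REQUEST = json.dumps({
    "domain": "weather",
    "action": "get_forecast",
    "params": {"city": "Cupertino", "day": "2012-06-12"},
})
print(siri_proxy(SIRI_REQUEST))
```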

But Siri is a little different. Just following an interface specification may not be enough. It needs to categorize requests into sub-domains so it can narrowly focus its analytic engine and quickly return a relevant response. To do so it first needs to be told of a sub-domain’s existence. This means maintaining some sort of registry for such sub-domains.

For Siri to be able to answer questions having to do with, say, real estate, an app or web service needs to tell Siri that henceforth any real-estate-related questions should be sent its way. Furthermore, it needs to provide a way to disambiguate between similar-sounding requests: something in one’s calendar vs. a movie show time, for example. So Siri will have to crack open its magic bottle a little and show developers how they can plug themselves into its categorization engine.
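To make the registry idea concrete, here is a toy sub-domain registry. It is a sketch, not how Siri actually categorizes anything. Real classification would be statistical, whereas this version just counts keyword overlaps. The `SubdomainRegistry` class, the sub-domain names, and the `com.example.homefinder` identifier are all hypothetical. When sub-domains tie, the registry returns all of them as candidates, which is the point where Siri would need to ask a disambiguating question.

```python
class SubdomainRegistry:
    """Toy registry: each sub-domain declares a handler and some trigger words."""

    def __init__(self):
        self._entries = {}  # sub-domain -> (handler id, set of trigger words)

    def register(self, subdomain, handler, keywords):
        self._entries[subdomain] = (handler, {k.lower() for k in keywords})

    def categorize(self, utterance):
        """Return the best-matching sub-domains; more than one means ambiguous."""
        words = set(utterance.lower().replace("?", "").split())
        scores = {d: len(words & kws) for d, (_, kws) in self._entries.items()}
        best = max(scores.values(), default=0)
        if best == 0:
            return []
        return sorted(d for d, score in scores.items() if score == best)

    def handler_for(self, subdomain):
        return self._entries[subdomain][0]


registry = SubdomainRegistry()
registry.register("real_estate", "com.example.homefinder", ["house", "condo", "listing", "bedroom"])
registry.register("calendar", "builtin.calendar", ["meeting", "appointment", "schedule"])
registry.register("movies", "builtin.movies", ["movie", "showtime", "playing", "theater"])

print(registry.categorize("Find a three bedroom house near me"))
print(registry.categorize("What time is my movie appointment?"))  # ambiguous: two candidates
```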

In the Android world there is a precedent for this: applications can register themselves as handlers for Intents (for example, the ability to share pictures). At runtime an Android app can ask the system to locate an activity that can handle a specific task. The system brings up a list of registered candidates, asks the user to pick one, then hands over a context to the chosen app.

This registry is fairly static and limited to what’s currently installed on the device. There has been an effort to define Web Intents, but that’s more of a browser feature than a dynamic, network-aware version of Intents.
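The Android-style flow can be modelled in plain Python: an app declares its actions at install time, the system resolves candidates at runtime, the user chooses one, and the context is handed over. This is an illustration, not the Android API. In real Android code the declarations are intent filters in `AndroidManifest.xml` and the chooser comes from `Intent.createChooser`. The action strings and app names here are made up. As a simplifying design choice, this sketch skips the chooser when only one app qualifies.

```python
from dataclasses import dataclass, field


@dataclass
class InstalledApp:
    name: str
    actions: set = field(default_factory=set)  # actions declared at install time


@dataclass
class Intent:
    action: str
    extras: dict = field(default_factory=dict)  # context handed to the chosen app


def resolve(intent, installed, choose):
    """Find apps that declared the intent's action, let the user pick one via
    `choose`, and return (app name, context) for the hand-off, or None."""
    candidates = [app for app in installed if intent.action in app.actions]
    if not candidates:
        return None
    chosen = candidates[0] if len(candidates) == 1 else choose(candidates)
    return chosen.name, dict(intent.extras)


apps = [
    InstalledApp("Gallery", {"VIEW_IMAGE"}),
    InstalledApp("Mail", {"SEND", "SHARE_IMAGE"}),
    InstalledApp("PhotoShare", {"SHARE_IMAGE"}),
]
share = Intent("SHARE_IMAGE", {"uri": "file:///sdcard/beach.jpg"})
# Simulate the user tapping the last entry in the chooser dialog.
print(resolve(share, apps, choose=lambda options: options[-1]))
```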


It should be noted that Google Now, under the latest Jelly Bean version of Android, has opted for a different, more Siri-like approach: queries are sent to a server and are limited to a pre-defined set of services. We may have to wait for Android Key Lime Pie or Lemon Chiffon Cake (or something) before individual apps are allowed to integrate with Google Now.

Context is King

Siri has proven adept at remembering necessary contextual data. Tell it to send a message to your ‘wife’ and it will ask you who your wife is. Once you select an entry in your address book, it remembers that relationship and can henceforth handle any task with the word ‘wife’ in it without a hitch.

What this means, however, is that if a similar user-friendly tack is taken, the first app to plant its flag in a specific domain will likely be selected as the ‘default’ choice for that domain and reap the benefits till the end of time. It will be interesting to see whether Apple allows applications to openly declare domains of their own or creates a fixed taxonomy (for example, SIC codes) within which apps must identify themselves. It’ll also be interesting to see whether the App Store review process vets apps for category ‘poaching’ or even classic cybersquatting.
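Both behaviours described above fit in a small sketch: remembering a relationship after asking once, and letting the first app to claim a domain become its default. The class, contact, and app names are hypothetical. Using `dict.setdefault` makes the first-come, first-served risk explicit, because any later claim simply returns the existing default.

```python
class AssistantContext:
    """Toy model of remembered relationships plus a sticky default per domain."""

    def __init__(self):
        self.relationships = {}    # e.g. "wife" -> contact name
        self.domain_defaults = {}  # domain -> first app that claimed it

    def resolve_person(self, word, ask_user):
        # Ask only the first time; afterwards the stored answer is reused.
        if word not in self.relationships:
            self.relationships[word] = ask_user(f"Who is your {word}?")
        return self.relationships[word]

    def claim_domain(self, domain, app):
        # setdefault keeps the first claimant and ignores later ones.
        return self.domain_defaults.setdefault(domain, app)


# Usage with a fake "ask the user" callback that records each prompt.
prompts = []


def ask_user(prompt):
    prompts.append(prompt)
    return "Jane Appleseed"  # the contact the user picks


ctx = AssistantContext()
ctx.resolve_person("wife", ask_user)
ctx.resolve_person("wife", ask_user)        # answered from memory, no prompt
ctx.claim_domain("coffee", "EarlyBirdCoffee")
ctx.claim_domain("coffee", "BetterCoffee")  # too late: the default is taken
print(prompts, ctx.domain_defaults)
```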

It’s likely such a categorization will be implemented as application metadata. In the case of web services, it will likely appear as HTML metadata or a fixed-name plist file that Siri will look for. On the client side, it’ll likely show up the same way Apple requires apps to declare entitlements today: in the application plist.
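To illustrate the plist approach, Python's standard `plistlib` module can write and read the same XML property-list format that iOS uses for `Info.plist`. `CFBundleIdentifier` and `CFBundleDisplayName` are real Info.plist keys. The `SiriServices` dictionary, everything under it, and the `com.example.postcards` identifier are invented for this sketch.

```python
import plistlib

# Only CFBundleIdentifier and CFBundleDisplayName are real Info.plist keys;
# "SiriServices" and its contents are hypothetical.
info_plist = {
    "CFBundleIdentifier": "com.example.postcards",
    "CFBundleDisplayName": "Postcards",
    "SiriServices": {
        "Subdomains": ["greeting_cards", "postal_mail"],
        "TriggerPhrases": ["send a postcard", "mail a card"],
    },
}

# Serialize to XML, as an app bundle (or a web server) might ship it.
xml_bytes = plistlib.dumps(info_plist)
print(xml_bytes.decode("utf-8"))

# What the OS side might do at install time: read it back and index it.
parsed = plistlib.loads(xml_bytes)
print(parsed["SiriServices"]["Subdomains"])
```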

So for a web server to become Siri compliant, it will likely need to:

- Respond to a pre-defined Siri web interface and data exchange format.

- Provide metadata to help Siri categorize the service into specific subdomains, and

- Optionally, offer some sort of Quality of Service guarantee by showing that it can handle the potential load.
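Apple would also need some way to check those requirements. Below is a rough, hypothetical conformance check. The required fields and the 0.5-second budget are arbitrary assumptions, and timing a single call is only a crude stand-in for real load testing. The two sample services are invented to show one passing case and one failing case.

```python
import json
import time

# Hypothetical response contract: required top-level fields and their types.
REQUIRED_FIELDS = {"status": str, "domain": str, "result": dict}


def check_conformance(service, sample_request, latency_budget_s=0.5):
    """Call `service` (JSON string in, JSON string out) once and list problems.
    An empty list means the response passed these checks."""
    start = time.perf_counter()
    raw = service(json.dumps(sample_request))
    elapsed = time.perf_counter() - start
    try:
        body = json.loads(raw)
    except json.JSONDecodeError:
        return ["response is not valid JSON"]
    if not isinstance(body, dict):
        return ["response is not a JSON object"]
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(body.get(name), expected_type):
            problems.append(f"missing or mistyped field: {name}")
    if body.get("domain") != sample_request.get("domain"):
        problems.append("response domain does not match request")
    if elapsed > latency_budget_s:
        # One timed call is a crude stand-in for real load testing.
        problems.append(f"too slow: {elapsed:.3f}s > {latency_budget_s}s")
    return problems


def good_service(raw):
    request = json.loads(raw)
    return json.dumps({"status": "ok", "domain": request["domain"], "result": {"listings": 3}})


def sloppy_service(raw):
    return json.dumps({"status": "ok", "result": []})


sample = {"domain": "real_estate", "action": "search", "params": {"city": "Austin"}}
print(check_conformance(good_service, sample))
print(check_conformance(sloppy_service, sample))
```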

Currently Siri is integrated with a few back-end services hand-picked by Apple and baked into iOS. To truly open up third-party access, iOS would have to allow these services to be registered dynamically, perhaps even registered and made available through the App Store as operating system extensions. This could potentially give rise to for-pay Siri add-ons tied into subscription services.

’nuff said. Let’s move on to…

The Client Side

What if you have an app that lets you compose and send custom postcards, or order coffee online, or look up obscure sports scores? How could apps like this be driven and queried by Siri?

To see an example of this, let’s look at how Siri integrates with the weather app. Suppose we ask it a weather-related question: “How hot will it be tomorrow around here?”

To answer this question it has to go through a number of steps:

- Translate the voice query to text.

- Categorize the query as weather-related (“hot”).

- Find our current location via location services (“around here”).

- Determine when “tomorrow” is, given the user’s current locale.

- Relay a search to the weather server for data for that date and location. Note that it interpreted ‘how hot’ as a request for the ‘high’ temperature.

- Present the data to the user in a custom card. Note that it responded with both a spoken text response and a visual one, with ‘tomorrow’ highlighted in the results.
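The weather steps above can be strung together as a pipeline. Every stage in this sketch is a stub. Voice-to-text is skipped because the input is already text, the classifier is a keyword check, location arrives as a latitude/longitude pair, and the hypothetical `fake_weather_server` returns canned data. None of these names reflect Siri's real internals. The sketch only shows how the parsed pieces feed into one back-end query: ‘hot’ means the high temperature, and ‘tomorrow’ means the day after the device's current date.

```python
import datetime as dt

WEATHER_WORDS = {"hot", "cold", "rain", "weather", "temperature"}


def categorize(text):
    # Stand-in for Siri's classifier: any weather word marks the query as weather.
    words = set(text.lower().strip("?").split())
    return "weather" if words & WEATHER_WORDS else None


def resolve_day(text, today):
    # "tomorrow" is relative to the device's current date (and time zone).
    return today + dt.timedelta(days=1) if "tomorrow" in text.lower() else today


def pick_field(text):
    # "How hot" asks for the high temperature, "how cold" for the low.
    lowered = text.lower()
    if "hot" in lowered:
        return "high"
    if "cold" in lowered:
        return "low"
    return "summary"


def fake_weather_server(lat, lon, day):
    # Hypothetical back end that ignores the location and returns canned data.
    return {"date": day.isoformat(), "high": 81, "low": 62, "summary": "Sunny"}


def answer(text, location, today):
    """Text in (voice-to-text assumed done), short spoken-style answer out."""
    if categorize(text) != "weather":
        return None
    day = resolve_day(text, today)
    data = fake_weather_server(*location, day)
    field = pick_field(text)
    return f"{field.capitalize()} on {data['date']}: {data[field]}"


# "around here" becomes the device's coordinates from location services.
print(answer("How hot will it be tomorrow around here?", (37.33, -122.03), dt.date(2012, 6, 11)))
```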

Weather is one of those services where the search can be performed directly from Siri’s back-end service or initiated on the device, either through the existing stock weather app or through a code framework with a private API.

Imagine where your app might fit in if the user were to make a similar voice inquiry intended for it.

- Siri might need to be given hints to perform speaker-independent voice-to-text analysis. It appears that at least part of this conversion is done on the server (turn networking off and Siri stops working), so the code doing the actual conversion, wherever it resides, will need access to these hints (or voice templates).

- To categorize the text of the request as belonging to your subdomain, Siri would need to know about that subdomain and its association with your app.

- Location would be obtained via on-device location services.

- Time would be obtained via on-device time and locale settings.

- The request would have to be relayed to your app and/or back-end service in some sort of normalized query form, with the data returned in a similar form.

- The result could be presented to the user either as spoken text or on a graphic card. If it is presented as text, there has to be enough context for Siri to answer follow-up questions (“How hot will it be tomorrow?” “How about the following week?”). If it is shown as a visual card, the result has to be converted into a UIView, either by Siri or by your code.
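Follow-up questions are the trickiest part. One way to picture them is as a normalized query object that the assistant keeps between turns, so that a follow-up only has to change the slot it mentions. `NormalizedQuery` and `Conversation` are hypothetical. Reading “the following week” as the next Monday-to-Sunday calendar week is an assumption made for this sketch.

```python
import datetime as dt
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class NormalizedQuery:
    # Hypothetical normalized form handed to an app or back end.
    domain: str
    attribute: str      # e.g. "high" for "how hot"
    location: tuple     # (latitude, longitude)
    start: dt.date
    days: int = 1


class Conversation:
    """Keeps the last query so a follow-up only has to supply what changed."""

    def __init__(self):
        self.last = None

    def ask(self, query):
        self.last = query
        return query

    def follow_up_next_week(self):
        # "How about the following week?" names no domain, attribute, or place,
        # so those are inherited; only the time window changes.
        if self.last is None:
            raise ValueError("no earlier query to follow up on")
        start = self.last.start
        next_monday = start + dt.timedelta(days=7 - start.weekday())
        return self.ask(replace(self.last, start=next_monday, days=7))


convo = Conversation()
first = convo.ask(NormalizedQuery("weather", "high", (37.33, -122.03), dt.date(2012, 6, 12)))
second = convo.follow_up_next_week()
print(second)
```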

For steps #1, #2, #5, and #6 to work, Siri has to have a deep understanding of your app’s capabilities and tight integration with its inner workings. It also needs to be able to perform voice-to-text conversion, categorize the domain, and determine whether the request is best handled by your app before actually launching it. What’s more, it would need to send the request to your app without invoking your user interface!

In other words, for Siri to work with third-party apps, those apps would have to provide a sort of functional map or dictionary of services, along with a way for Siri to interact with them in a headless fashion.

Sound familiar?

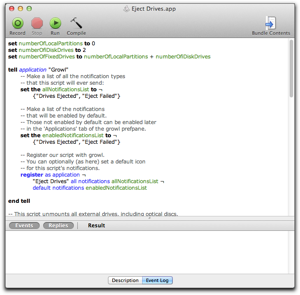

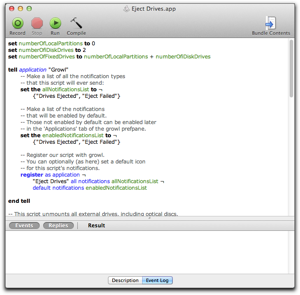

It’s called AppleScript, and it’s been an integral part of Mac desktop apps since the early 1990s and System 7.

For desktop apps to be scriptable, they have to publish a dictionary of the actions they can perform, in a separate resource that the operating system can read without launching the app itself. The dictionary lists the set of classes, actions, and attributes accessible via scripting.

For Siri to access the internals of an app, a similar technique could be used. However, the app would have to provide additional data to help Siri match natural-language requests phrased in the user’s own language.

The dictionary would have to exist outside the app so the OS could quickly match a user query to an app’s capabilities without having to launch the app first. It would likely be ingested by the OS whenever a new app is installed on the device, just as the application plist is handled today. Siri in iOS 6 will have the ability to launch an app by name; it most likely gets app names from the existing launch services registry on the device.
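An install-time capability index built from such dictionaries might look roughly like this. The dictionary format and the `com.example.coffee` identifier are hypothetical. The format is loosely inspired by the idea of an AppleScript dictionary, not by its actual file format. Phrase matching is naive substring search, and the longest matching phrase wins, so a specific phrase such as ‘where is my order’ beats a generic one such as ‘order’.

```python
# Hypothetical per-app dictionary: commands, their parameters, trigger phrases.
COFFEE_APP_DICTIONARY = {
    "app": "com.example.coffee",
    "commands": {
        "order": {"params": ["drink", "size"], "phrases": ["order", "buy", "get me"]},
        "status": {"params": ["order_id"], "phrases": ["where is my order"]},
    },
}


class CapabilityIndex:
    """Built by the OS as apps are installed; queried without launching any app."""

    def __init__(self):
        self._phrases = []  # (phrase, app id, command name)

    def ingest(self, dictionary):
        # Called once per app at install time.
        for command, spec in dictionary["commands"].items():
            for phrase in spec["phrases"]:
                self._phrases.append((phrase, dictionary["app"], command))

    def match(self, utterance):
        text = utterance.lower()
        hits = [(len(p), app, cmd) for p, app, cmd in self._phrases if p in text]
        if not hits:
            return None
        # The longest matching phrase wins, so specific phrases beat generic ones.
        _, app, cmd = max(hits)
        return app, cmd


index = CapabilityIndex()
index.ingest(COFFEE_APP_DICTIONARY)
print(index.match("Get me a large latte"))
print(index.match("Where is my order?"))
```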

But a dictionary alone is not enough. Siri would need to interact with an app at a granular level. iOS and Objective-C already provide a mechanism that allows a class to implement a declared interface. It’s called a protocol, and iOS is packed full of them. For an app to become Siri compliant, it would have to not only publish its dictionary but also expose classes that implement a pre-defined protocol so that Siri can access its individual services.
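Python's `typing.Protocol` is a reasonable stand-in for an Objective-C protocol when sketching this idea. The `SiriService` protocol, its two methods, the `CoffeeOrdering` class, and `dispatch_headless` are all hypothetical. Note that `runtime_checkable` only verifies that the methods exist, not that their signatures match.

```python
from typing import Protocol, runtime_checkable


@runtime_checkable
class SiriService(Protocol):
    """Hypothetical protocol an app class would adopt (cf. an Objective-C
    @protocol). Method names are illustrative, not Apple API."""

    def can_handle(self, command: str) -> bool: ...

    def perform(self, command: str, params: dict) -> dict: ...


class CoffeeOrdering:
    # Conforms structurally: no UI, just data in and data out.
    def can_handle(self, command: str) -> bool:
        return command == "order"

    def perform(self, command: str, params: dict) -> dict:
        return {"confirmation": f"{params['size']} {params['drink']} ordered"}


def dispatch_headless(service: object, command: str, params: dict) -> dict:
    # isinstance on a runtime_checkable Protocol only checks that methods exist.
    if not isinstance(service, SiriService):
        raise TypeError("object does not implement SiriService")
    if not service.can_handle(command):
        raise LookupError(f"unsupported command: {command}")
    return service.perform(command, params)


print(dispatch_headless(CoffeeOrdering(), "order", {"drink": "latte", "size": "large"}))
```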

The implications of making apps scriptable will be profound for the developer community. To be clear, I don’t think the scripting mechanism will be AppleScript as it exists today. The driver of this integration mechanism will be Siri, so any type of automation will have to allow apps to function as first-class citizens in the Siri playground. This means natural-language support, intelligent categorization, and contextual data: capabilities beyond what regular AppleScript can provide. But given Apple’s extensive experience with scripting, there is a well-trodden path for them to follow.

Once an app can be driven by Siri, there’s no reason it couldn’t also be driven by an actual automated script or a tool like Automator. This would allow power users to create complex workflows that cross multiple apps, as they can today under Mac OS X. If these workflows could themselves be driven by Siri, a raft of mundane, repetitive tasks could be managed easily through Siri’s voice-driven interface.
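A cross-app workflow of that kind comes down to running headless actions in sequence over a shared context, loosely like an Automator workflow passing results from one action to the next. The three actions below stand in for hypothetical scriptable apps (calendar, maps, mail) and return canned data. The names and addresses are invented.

```python
from typing import Callable, Iterable

Step = Callable[[dict], dict]  # a headless app action: context in, new values out


def run_workflow(steps: Iterable[Step], initial: dict) -> dict:
    """Run steps in order, merging each step's output into a shared context."""
    context = dict(initial)
    for step in steps:
        context.update(step(context))
    return context


# Hypothetical actions standing in for three different scriptable apps.
def find_next_meeting(ctx: dict) -> dict:  # calendar app
    return {"meeting": "Design review",
            "attendees": ["ana@example.com", "raj@example.com"]}


def estimate_delay(ctx: dict) -> dict:  # maps/traffic app
    return {"minutes_late": 10}


def email_attendees(ctx: dict) -> dict:  # mail app
    body = f"Running {ctx['minutes_late']} minutes late for {ctx['meeting']}."
    return {"sent": [(address, body) for address in ctx["attendees"]]}


result = run_workflow([find_next_meeting, estimate_delay, email_attendees], {"user": "me"})
print(result["sent"])
```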

Let’s also consider that if apps are made scriptable it may be possible for them to invoke each other via a common interface. This sort of side-access and sharing between apps is currently available on the Mac but is sorely lacking in iOS. If allowed, it would open up a whole world of new possibilities.

During a conversation over beers, a fellow developer pointed out that Apple has no incentive to open up Siri to third parties. Given the competitive comparisons being drawn between Google Now and Siri, I’m hopeful Apple will move more quickly now.

See… it shouldn’t be long 🙂

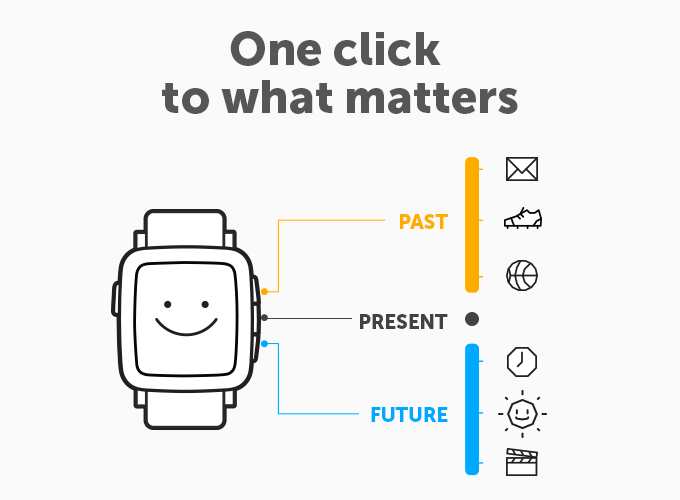

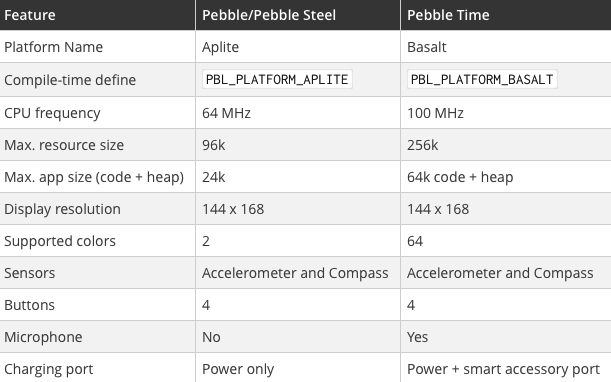

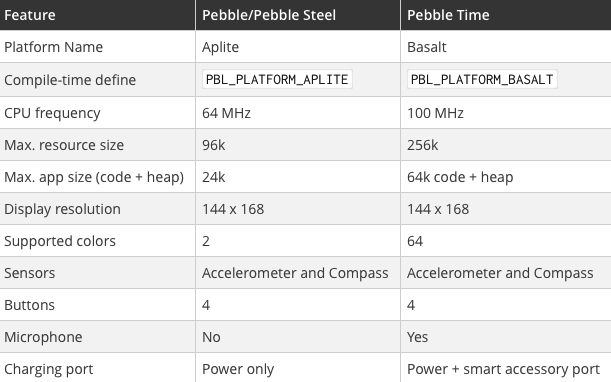

Pebble just announced their 2nd-gen watch a couple of days ago. They put it up on Kickstarter yesterday morning for a 31-day fundraising campaign and reached their minimum in about 20 minutes. As of the end of day 2 they were getting close to the $10M mark, and they’re well on their way to breaking the Kickstarter record currently held by a cooler.

The device itself is going to run an ARM M4 at 100MHz (vs. an ARM M3 at half the speed on the first-gen device). It comes with 64KB of RAM, Bluetooth classic and LE, an accelerometer, a 3D compass, an ambient light sensor, and a microphone. The microphone is currently intended for sending voice replies or recording short notes. It’s got a snazzy color e-ink-type display that supports up to 64 colors.


The video on the Kickstarter page shows pretty smooth animation, with very little of the tearing or blanking refreshes seen on most B&W e-ink displays. Since it’s not backlit, Pebble claims there will be good visibility in daylight. Some folks have been sleuthing around and think this is the display manufacturer: http://www.j-display.com/english/news/2014/20140109.html. Battery life is claimed to be the same 5-7 days.

It also has a very interesting hardware add-on port on the side. There’s not much in the way of specs yet, but the idea is that third parties will be able to create add-on watchbands that plug into the port. Apparently these could do things like control LED lights, add heart-rate sensors, or supply extra battery power.

Software-wise, they’re going to try to remain backward compatible with the C SDK on the first watch. However, a new SDK is supposedly in the works to allow better support for animation, including integration with their new Timeline interface. The C SDK already had support for simple UI, access to some of the sensors, and HTTP requests via the JS engine proxy baked into the Pebble phone app. The new SDK will presumably simplify the UI, support color and animation, and provide access to the Timeline.

There will be a Pebble Meetup in San Francisco tomorrow night that I will be attending. I hope to have more details and (if permitted) take photographs of the device itself.

